6 X-bar syntax

Learning Objectives

By the end of this chapter, you should be able to:

- identify intermediate levels (“bar levels”) using constituency tests

- understand the motivation for the X-bar schema

- draw a tree using the X-bar schema

- identify the distinct positions within the X-bar schema

- diagnose the difference between adjuncts and complements

- understand the basis of parametric variation, and how it can be explained

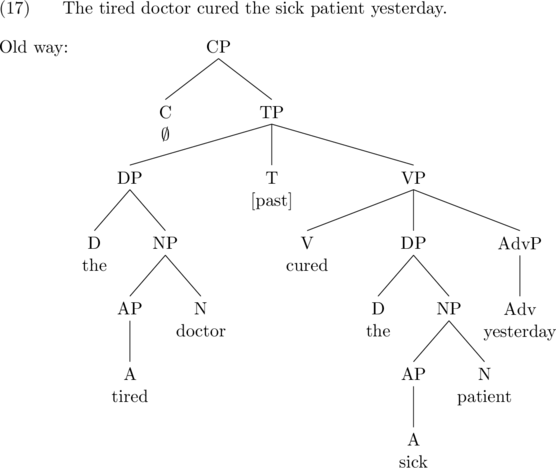

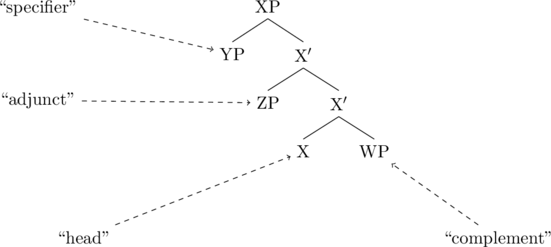

By the end of this chapter, we will have technically changed theories. We’re going from Phrase Structure Grammars to X-bar Syntax. But the trick is to recognize that, even though we’re going to be using the term “X-bar syntax,” we aren’t actually changing theories at all. The same principles, formalizations, and theoretical tools that we’ve already been using will be used in X-bar syntax. Our trees are just going to look more complicated. The reason is that we’re going to discover in this chapter that there are a lot of “hidden” layers. Just as we learned to infer the presence of structure when we discussed functional categories, even when we can’t directly see it, we’ll use the same tools to infer the presence of “bar-levels.”

From this, we’ll make a theoretical leap. As a hypothesis about syntax, we’ll adopt a uniform structural representation for all phrases. This is “X-bar syntax.” It’s the hypothesis that all phrases share a structure, i.e., every phrase looks identical. The hypothesis makes a number of testable predictions, which we’ll explore in terms of the complement/adjunct distinction.

Deconstructing VP

Let’s start by looking again at English VPs. Assume the (very simplistic) rule for VPs below.

Simplified VP rule for English (to be revised)

- VP → V (DP) (PP)+

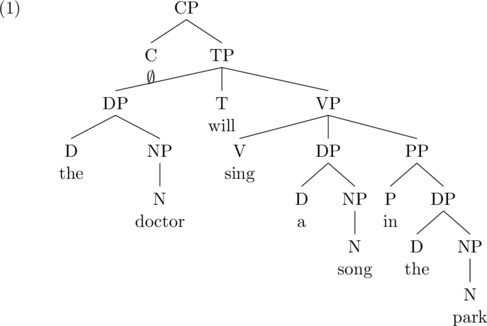

This rule allows us to minimally distinguish between transitive and intransitive verbs, each of which could have a modifying prepositional phrase. For instance, using this VP rule, here’s the structure for The doctor will sing a song in the park.

This tree correctly represents that in the park is a constituent. It also correctly represents that sing a song in the park is a constituent. However, it still is getting the constituency wrong. Look at the substitution test below. What does it show is a constituent?

![]()

What’s the problem here? The test demonstrates that sing a song is a constituent, because I am able to replace that string with do so. The tree in (1) gets this wrong. The string sing a song isn’t a constituent according to this tree because there is no node that contains the words sing a song and nothing else.

Is our constituency test just giving us a false positive? Let’s confirm. Below I’m applying the other constituency tests.

(3) Fragments: What will the doctor do in the park? Sing a song

(4) a. It-clefting: *It was sing a song that the doctor will do in the park.

b. Pseudo-clefting: Sing a song is what the doctor will do in the park.

c. All-clefting: Sing a song is all the doctor will do in the park.

d. Topicalization: *Sing a song, the doctor will do in the park.

(5) Coordination: The doctor will [ sing a song ] and [ dance ] in the park

So our constituency tests are telling us that sing a song is a constituent—and that it’s a VP. Recall that substitution and coordination allow us to diagnose the category of the constituent. But here’s the thing: the string sing a song in the park is also a VP. How do I know? It passes all the same constituency tests, and we can detect its category through substitution and coordination.

(6) Substitution: The doctor will sing a song in the park, and the nurse will do so, too

(7) Fragments: What will the doctor do? Sing a song in the park

(8) a. It-clefting: *It was sing a song in the park that the doctor will do.

b. Pseudo-clefting: Sing a song in the park is what the doctor will do.

c. All-clefting: Sing a song in the park is all the doctor will do.

d. Topicalization: *Sing a song in the park, the doctor will do.

(9) Coordination: The doctor will [ sing a song in the park ] and [ dance at home ]

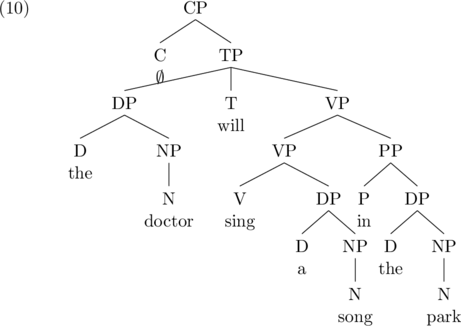

So based on this evidence, it looks like our tree should actually have the following representation.

This version of the tree correctly captures our empirical result: we have two distinct VP constituents. (It also correctly predicts that a song in the park is not a constituent, which you can confirm on your own.) The “trick” is that we’ve added a VP in-between the head and the highest VP. This projection is empirically motivated, though. It’s there because our tests tell us it’s there. (Note that technically, the highest VP violates the Headedness Principle.)

We call this intermediate projection the “bar” level, and write it either with a line over the top (V̄) or an apostrophe after (V’). It’s pronounced “V bar” (not “V prime”). This is just a notation for saying “I’m not the highest projection in this phrase.”

To review: we’ve used our constituency tests to determine that there is a “hidden” level in verb phrases.

Let’s look at more data.

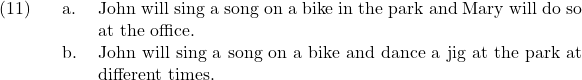

These data are just like the data above, except that I’ve added in more prepositional phrases. So now sing a song on a bike can’t be the highest VP in the structure. The highest VP is the entire constituent sing a song on a bike in the park. This is the topmost VP. Now in fact, we need two intermediate projections, because the constituents sing a song, sing a song on a bike, and sing a song on a bike in the park are all VPs.

![]()

These data actually suggest that I can simplify my representation quite a bit, because they suggest that I can make do without the “+” symbol. All I need are three rules like the following. Note that three rules is actually simpler than one rule with a plus sign because now we can get by with less formalism. By analogy, 2 + 2 + 2 is simpler than 2 x 3 because it provides the same information with a less complex operator.

Simplified VP rule for English (revised)

- VP → V’

- V’ → V’ PP

- V’ → V (DP)

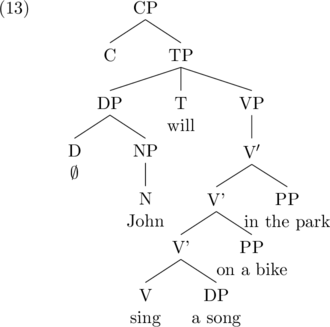

I’ve done a very tricky (but ultimately useful) thing here. I’ve explicitly added recursion into my English phrase structure grammar. There’s a rule that says V’ (i.e., an intermediate projection between the head and the topmost VP) can consist of itself and a prepositional phrase. Why does this work? Consider the tree for John will sing a song on a bike in the park which uses the new rule above.

When I build a VP now, I have an option: I can either add in an infinite number of prepositional phrases by adding in a new V’ level, or I can just go directly to the head V. I’ve essentially created a way to make an infinitely recursive loop—with an escape hatch. Most importantly, all of these intermediate projections correlate with distinct, identifiable constituents. That is, our structure correctly represents how English packages its information.

Deconstructing TP

We can do the exact same thing with TP. Let’s assume our rule for TP developed in the last chapter.

TP rule for English (to be revised)

- TP → DP T VP

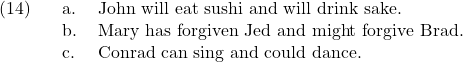

Now consider the following data, which suggests that there is a bar-level—an intermediate projection—in TP.

What is this data showing? It shows that I can coordinate will eat sushi with something else. That is, there appears to be a constituent that includes T and VP, but does not include the subject. This is not possible, according to our rule above. To fix this issue, we can re-write our rule, parallel to what we did with VP.

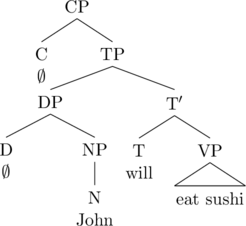

TP rule for English (revised)

- TP → DP T’

- T’ → T VP

Adding in this extra level correctly captures what our constituency test is telling us.

This tree now correctly captures the fact that T forms a constituent with VP, to the exclusion of the subject. That constituent is the bar level, T’.

What if I add in more words? Here I’m adding a modifier of TP.

![]()

This data now suggests that the modifier gets grouped together with T and the VP in the first example (because both constituents have a similar modifier), but the second example shows us that T and the VP can form a constituent that is separate from this modifier (because definitely is describing both the eating and drinking events). How are we going to make this work? If Adverbs[1] like definitely, probably, etc. may sometimes be distinct from the VP, and sometimes not, we need to adjust our rules about where adverbs go in TP.

Just like with VP, we can fix this issue concisely by adding a recursive T’ level!

TP rule for English (revised)

- TP → DP T’

- T’ → AdvP T’

- T’ → T VP

This set of rules allows us to add as many modifiers of TP as we want, and we will always find that each additional modifier “creates” a subconstituent within TP.

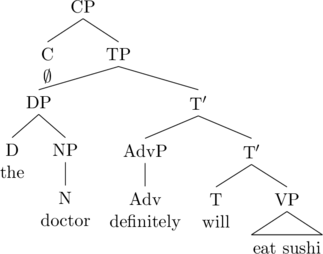

Deconstructing NP

We’ll do the exact same thing with NP. Keep in mind that we’ve adopted the DP-hypothesis, so all NPs are inside of DPs.

Simplified NP rule for English (to be revised)

- NP → (AP)+ N

With this rule in mind, consider the patterns of one substitution shown below.

![]()

We observed in the last chapter that one substituted for NP, but these data suggest that that isn’t quite accurate. One must be substituting for something between NP and N. Consider (16b). One is replacing the constituent blue book. This includes an adjective, so it’s not just the head N, but it excludes the higher adjective short/long, so this cannot be the entire NP.

We therefore find evidence in NP for an intermediate projection, and we re-write our rules accordingly. Now we can add in an infinite number of prenominal adjectives by adding in more intermediate projections.

Simplified NP rule for English (revised)

- NP → N’

- N’ → AdjP N’

- N’ → N

These rules correspond to the following tree.

Generalizing across categories

When we look across all of our rules, we can make a few generalizations:

- There’s always a rule of the form XP → X’, where the phrase level goes to the intermediate level. (Sometimes this rule has an element in front of X’.)

- There’s always a rule of the form X’ → X, where the bar-level consists of the head. (Sometimes the head is followed by something on the right.)

- There’s always a recursive rule of the form X’ → YP X’ or X’ → X’ YP, where YP is a modifier of some sort.

If this pattern exists for every single category, it suggests that we can generalize our schema for phrase structure. We hypothesize that every phrase has the following rules. The “X, Y, Z, W” below are variables meaning “any category.”

Generalized phrase structure rules (final version)

- XP → (YP) X’

- X’ → X’ ZP

- X’ → ZP X’

- X’ → X (WP)

If this is right, it means that all phrases are constructed based on these rules. In the rules above X, Y, Z, and W are variables over categories.

What about the Headedness Principle? Have we entirely abandoned it? No! The Headedness Principle, which says that every phrase has a head and every head is in its phrase, is exactly the same. But we’ve redefined what “phrase” means. Now a phrase involves at least one X’-level. A phrase consists of all of the rules above: it is the X-bar schema. The term “phrase” is now a structural notion.

The X-bar schema is a hypothesis. By looking at a lot of data, we identify that there are intermediate projections in every phrase that we look at. The subsequent phrase structure rules all end up looking very similar—suspiciously similar in fact. The X-bar schema is a way to make sense of this information. It proposes that the similarities reflect a “deep” uniformity across syntax. Our trees end up looking more complex, because now everything needs at least one intermediate projection. But the complexity of the representations comes with an overall simplification of the theory: it isn’t necessary to learn a bunch of arbitrary rules for syntax. You only need the four above, because they apply to every phrasal category.

Trees in the X’ schema (length: 1m 28s)

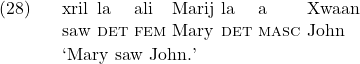

Note we also still need special rules for coordination, which look basically the same as before.

Rules for coordination (final version)

- XP → XP conj XP

- X’ → X’ conj X’

- X → X conj X

These rules allow you to coordinate heads (X), bar-levels (X’) and phrases (XP), but you can’t mix-and-match. You cannot coordinate a bar level with a head, for instance.

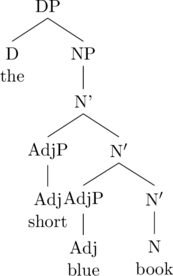

Positions in X’-syntax

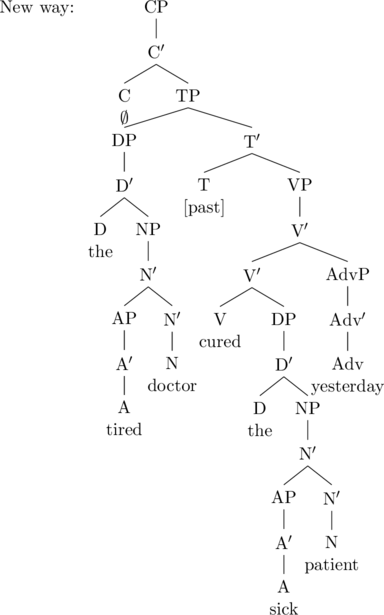

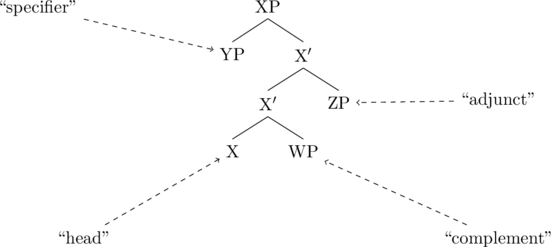

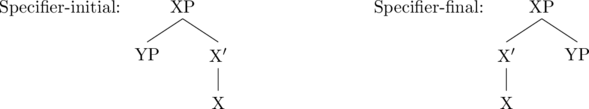

We define four positions within the X’-schema: specifier, head, complement and adjunct. The positions are indicated in the trees below.

- A head is the only non-phrasal terminal node in a phrase.

- A specifier is defined as, “The phrase that is daughter of XP and sister to X’.” Every phrase that meets this description is a specifier.

- A complement is defined as, “The phrase that is sister to X and daughter to X’.” Every phrase that meets this description is a complement.[2]

- An adjunct is defined as, “The phrase that is daughter to X’ and sister to X’.” Every phrase that meets this description is an adjunct.

Note that the definitions above do not make reference to linear order. It doesn’t matter whether an adjunct is to the left or the right. All that matters is that its sister and mother are bar-levels. Likewise, a complement can be on the left or the right of a head, as long as it is sister to the head. And a specifier can be on the left or the right as well, as long as its mother is a phrase. Every language, however, makes particular choices about where specifiers and complements appear. We’ll return to this momentarily. For now, we note that in English, specifiers are always on the left, and complements are always on the right.

It is conventional to use the “genealogical” terms mother, sister, and daughter when referring to the relationships between elements in a tree. The terms are meant to be transparent: A “mother” is something that has “daughters.” If two things have the same mother, then they’re “sisters.”

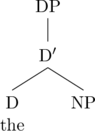

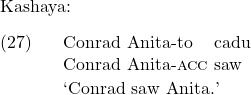

In the tree below, DP is the mother of D’. D’ is the mother of D and NP. D and NP are sisters, and are both daughters of D’.

The analogy basically stops there. There is no such thing as an “aunt” in syntax, or a “niece.”

We’ve seen a lot of complements. TP is a complement to C. NP is a complement to D. DP can be a complement to V when it’s an object of the verb. The only specifier we’ve seen is the subject. It’s the specifier of TP. There is another place where a specifier is necessary: DP.

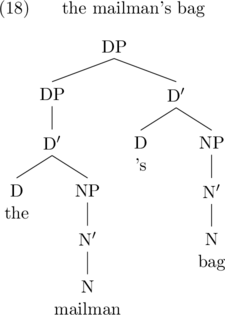

Possessive structure.

The other place where we observe specifiers is in possessive DPs.

We treat the possessive marker -s as a realization of D. This is because possessives are in complementary distribution with determiners: *the mailman’s the bag. And because the entire DP acts as a constituent referring to a bag, not a mailman, the head of the highest DP must be the possessive D.

Possessive DPs (length: 1m 51s)

Complements vs. Adjuncts

The X-bar schema illustrated above makes a prediction that some phrases that are next to a head are complements while some are adjuncts. They may look identical on the surface, but they derive from different structures.

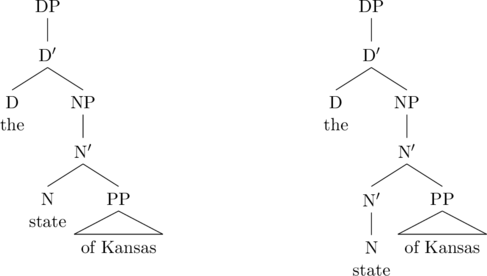

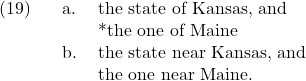

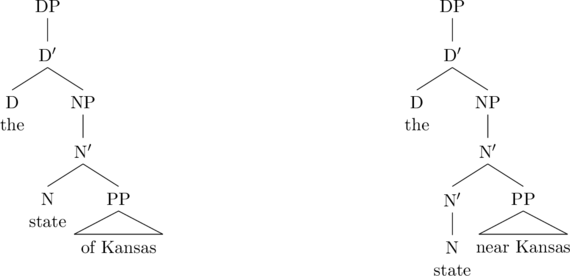

For instance, consider the phrase the state of Kansas. In the X-bar schema, this constituent (and it definitely is a constituent) could hypothetically correspond to either tree below. The prepositional phrase of Kansas could either be a complement to state (as in the tree on the left) or an adjunct to state (as in the tree on the right).

The two trees actually make distinct empirical predictions. We have four tests to distinguish between complements and adjuncts.

First, I just want to briefly mention that it is easy to confuse adjuncts and specifiers. When drawing your trees, remember that for every adjunct, you should have “plus one” bar-level, because adjuncts need both a bar-level which is a sister, and a bar-level which is a mother. So you have to add a layer whenever you add an adjunct.

Adjuncts in X’-syntax (length: 2m 15s)

Substitution

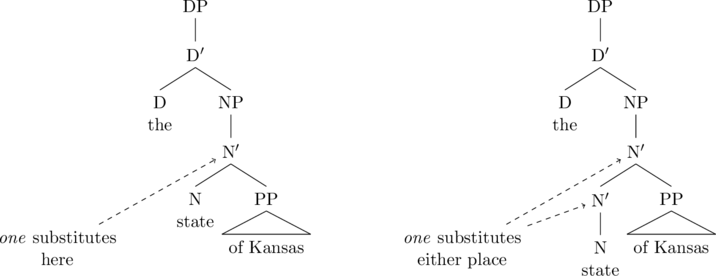

The constituency test of substitution has a different use. As we observed above, one substitution and do so substitution both target the bar-level. Since complements are under all bar-levels in a phrase, if you try to do substitution, a complement cannot be “left behind.” An adjunct can, though, because there’s always a bar-level that doesn’t include the adjunct.

Visually, consider the two trees again. If the PP is a complement to N, then when you substitute with one everything under N’ has to go too. In contrast, if the PP is an adjunct, it’s possible to substitute with one and leave behind the PP.

So to use the substitution test to determine if something is a complement or adjunct, you simply replace the head of the phrase, and see if you can leave behind the constituent in question.

Compare the sentences below.

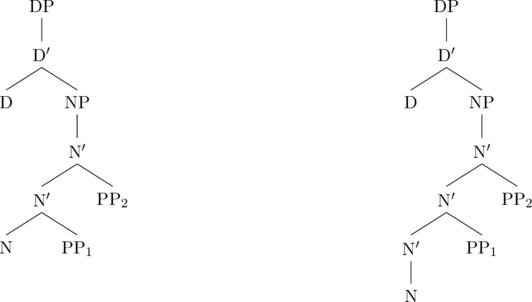

As illustrated above, one-substitution crucially yields an ungrammatical result in (19a), but not in (19b). Why is this? Again, note that one-substitution targets bar-levels; you can only replace the material that exists under a bar-level. The reason for the grammaticality distinction is that the NPs the state of Kansas and the state near Kansas differ in the number of bar-levels they contain. In [ NP state of Kansas ], one-substitution can replace N and PP together (20a), but not N alone (20b). In [ NP state near Kansas ], one-substitution can replace either the larger constituent containing N and PP (21a) or N alone (21b). This means that the NP in (21) must contain two bar-levels, and the NP in (20) only one.

(20) I visited the state of Kansas.

a. I visited the one. (targets: [ N’ [ N state ][ PP of Kansas ]])

b. *I visited the one of Kansas. (targets: [ N’ [ N state ]])

(21) I visited the state near Kansas

a. I visited the one. (targets: [ N’ [ N state ][ PP near Kansas ]])

b. I visited the one near Kansas. (targets: [ N’ [ N state ]])

We have defined a complement as the sister of the head that selects it and the daughter of the bar-level that contains that same head (i.e., a head and its complement share a bar-level parent). Conversely, we have defined an adjunct as both the sister to a bar-level and the daughter to a bar-level. The fact that one-substitution can target two different constituents in (21) therefore means that the NP the state near Kansas necessarily contains two bar-levels, and so must contain an adjunct. That is, the PP near Kansas must be an adjunct because there are two different targets (i.e., bar-levels) for one-substitution in (21): one can replace the lower bar-level to the exclusion of PP, i.e., [ N’ [ N state ]], or it can replace the higher bar-level that includes both the lower N’ and PP, i.e., [ N’ [ N’ [ N state ]][ PP near Kansas ]].

Step-by-step walkthrough of the substitution test

- Identify the head in question and the phrase which you want to test

- Identify the appropriate substitution word for the head.

- Replace the head with the substitution word, but don’t replace the phrase you’re testing. (Note that technically, you’re substituting at the lowest bar-level, which contains the head. The lowest bar-level could, in principle also have a complement.)

- Check the grammaticality. If it’s grammatical, the phrase is an adjunct. If it’s ungrammatical, the phrase is a complement.

Substitution test for complements vs. adjuncts (length: 1m 6s)

Substitution test for complements vs. adjuncts (length: 1m 9s) Credit Damian White Lightning

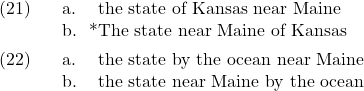

Reordering

Complements must appear as sisters to the head, and so they have a fixed position with respect to other phrases. But adjuncts can, theoretically, be reordered. This is because all that’s necessary is that they have a mother that’s a bar-level and a sister that’s a bar-level.

For instance, consider the following trees which each have two prepositional phrases. The tree on the left treats PP1 as a complement and PP2 as an adjunct. If we switched their positions, then we switch their roles.

In contrast, in the tree on the right, both the PPs are adjuncts. It shouldn’t matter whether we switch their positions, because they both will still be adjuncts.

What this means is that if a constituent is a complement, it cannot be reordered with another constituent. If a constituent is an adjunct, it can be.

The difference can be shown empirically in the following examples. You can’t reorder of Kansas and near Maine, suggesting that of Kansas is a complement to state. In contrast, you can reorder by the ocean and near Maine, so we conclude that by the ocean is an adjunct to state.

Step-by-step walkthrough of the reordering test

- Identify the head and the phrase which you want to test.

- Choose an appropriate phrase that can appear with the head and phrase above.

- Reorder the two phrases with respect to the head.

- Check the grammaticality. If it’s grammatical, the phrase is an adjunct. If it’s ungrammatical, the phrase is a complement.

Reordering test (length: 1m 2s)

Reordering test (length: 1m 6s) Credit: Damian White Lightning

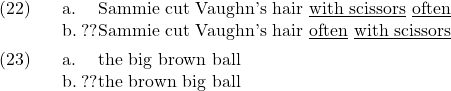

Sometimes we can’t reorder phrases for semantic reasons, independent (presumably) of syntax. There happens to be a fixed order for modification that is fairly robust cross-linguistically. For instance, temporal modifiers (things that deal with “time”) tend to be merged higher than (or “outside” of) manner modifiers (things that describe “how” the event was accomplished). Likewise, there is a fairly fixed ordering of adjectives in English (and many languages).

Note that there is a corollary to the reordering test, which is that complements are always strictly adjacent to the head. So if you can’t reorder something, then the phrase that is closest to the head must be a complement. If you can reorder something, then both phrases are adjuncts.

Iterability

If you remember the “trick” we did when we defined the X’-schema, we said that we could have an infinite number of bar-levels, depending entirely on how many we needed. Practically, what this means is that a head can have multiple adjuncts. In more formal terms, adjuncts are iterable. In contrast, because there is only one head per phrase, there can only ever be one complement. The distinction is empirically shown in the following data.

![]()

Step-by-step walkthrough of the iterability test

- Identify the head H and the phrase XP you want to know about.

- Choose another phrase of the same category as the phrase XP you’re testing.

- Add your new phrase at the end.

- Check the grammaticality. If it’s grammatical, the original phrase is an adjunct. If it’s ungrammatical, the original phrase is a complement.

Visually, here is what’s going on. In the tree on the left, there are two prepositional phrases, of Kansas and of Missouri. The prepositional phrase of Kansas is occupying the only complement position in NP. Of Missouri “wants” to be a complement—but it can’t! Of Kansas already took that place. The phrase *the state of Kansas of Missouri is ungrammatical because of Missouri is forced to be an adjunct (sister to bar-level, daughter to bar-level), when it wants to be a complement (sister to head, daughter to bar-level). But in the tree on the right, both PPs are adjuncts. The definition of an adjunct is that it have a bar-level as sister and a bar-level as mother—and we can have as many bar-levels as we want! So therefore, we can have as many adjuncts as we want.

A little more under-the-hood mechanics: this test is really asking about the semantic relationship between a head and some constituent. Heads tend to semantically require complements. For instance, a state in the US is always “of” something—i.e., it always has a name. But a state in the US is not necessarily “near” another state (e.g., Hawaii). Or put differently, being near another state is not intrinsic to being a state. But having a name is intrinsic to being a state. “Intrinsicness” often (though not always) correlates with complementhood.

Predication

The final test to distinguish a complement from an adjunct involves another kind of transformation. In this case, we are again testing the semantic relationship between the head and the phrase we are testing. The idea behind this test is that adjuncts are semantically close to the linguistic notion of predicate. Intuitively, predicates are things that need subjects. So verbs (or really verb phrases) are predicates. Adjectives can also be predicates, like in The tree is tall. Here the adjective tall is the contentful part of the sentence that is not the subject (the tree). (The copula is is usually taken to be meaningless, though that is a gross simplification.)

Importantly, there are certain parallels between predicates and adjuncts. For instance, it is largely true that adjectives that act as predicates can also act as adjuncts. For instance, the adjective tall can be a predicate, the tree is tall and it can also be an adjunct, the tall tree. (We call this attributive modification.) We can use the fact that there is a relationship between predicates and adjuncts by seeing whether the phrase we are testing can be a predicate. As a concrete example using our two test cases, consider the following sentences.

![]()

What this test shows us is that near Kansas can be a predicate, while of Kansas cannot. Because there is a natural link between predicates and adjuncts, we conclude that near Kansas is an adjunct in the state near Kansas. (Note that we don’t conclude that it’s an adjunct in The state is near Kansas. That is, the test tells us about our original phrase.)

Step-by-step walkthrough of predication test

- Identify the head (H) and the phrase (XP) you want to know about.

- Put the two pieces in the frame H is XP. (You can use any form of the verb be.)

- Check the grammaticality. If it’s grammatical, XP is an adjunct (in the original phrase). If it’s not grammatical, XP is a complement.

Unfortunately, this test can be a little tricky when it comes to verbs. Here’s the issue. Let’s compare these two sentences, and ask what the syntactic relationship is between the verb and the word following the verb.

![]()

If we brute force apply our test, we’re going to end up with nonsense in both cases: *James runs is races and *James runs is fast. This doesn’t tell us anything. The problem here is that runs is already a predicate, which means it can’t be a subject. There are ways to get around this issue—but none of them is foolproof. Among these, perhaps the safest is to turn the predicate runs into something else, like a noun. Here’s the test again, but this time I’ve nominalized the verb (i.e., made it into a noun), and included the original subject James as a possessor.

![]()

I’ve turned James runs into something that can be a subject: James’ running. This is a noun (technically a DP). Now when I apply my test, I see that fast acts like an adjunct, while races does not. Summarizing, if you want to apply the predication test for complements and adjuncts to a verb and a following phrase, you might have to nominalize the verb.

Based on the four tests above, we find that of Kansas is a complement to state. So the following is the correct representation:

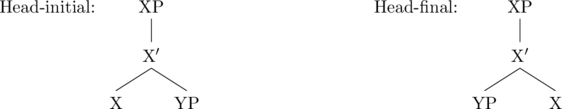

Parameters

The order of the specifiers and complements is fixed for any one language. What does that mean? For instance, in English, the complement always comes after the head. That is, English is head-initial. With specifiers, the specifier always comes before the head. English is specifier-initial.

However, logically there are two options for each position, which gives four possible settings.

- Specifier-initial : The specifier is to the left of its head (meaning to the left of the bar-level).

- Specifier-final : The specifier is to the right of its head (meaning to the right of the bar-level).

- Head-initial : The head is to the left of its complement.

- Head-final : The head is to the right of its complement.

It turns out that languages make different choices as to how they order their heads and specifiers (and adjuncts). This is called parametric variation. English is specifier-initial and head-initial. Thus, in “neutral” contexts, it will have the order of Subject-Verb-Object, or SVO.

Kashaya (a Pomoan language spoken in Northern California) has set its parameters to specifier-initial and head-final. So its basic word order is “Subject-Object-Verb,” or SOV.

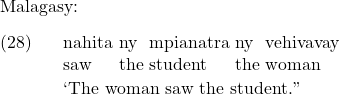

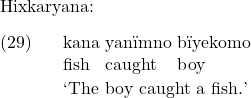

Malagasy (an Austronesian language spoken on Madagascar) has set its parameters to specifier-final and head-initial. Its basic word order is “Verb-Object-Subject,” or VOS.

Lastly, Hixkaryana (a Carib language spoken in Brazil) has set its parameters to specifier-final and head-final. So its basic word order is “Object-Verb-Subject,” or OVS.

If you’re counting, you’ll notice that I’ve said nothing about whether the two other logically possible word orders of Subject, Verb, and Object exist: VSO and OSV. These cannot be straightforwardly derived by simply stipulating specifier and head directions. Something more (i.e., movement) must be added to the theory.
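The four settings, and the two orders they cannot produce, can be checked with a small sketch. It makes a strong simplifying assumption that should be flagged: the clause is collapsed into a single phrase in which the subject S is the specifier and the object O is the complement of the verb V, ignoring T and everything else. The function name `linearize` is invented for this example.

```python
from itertools import product

def linearize(spec_initial, head_initial):
    """Return the basic word order predicted by two parameter settings.

    Simplification (an assumption of this sketch): S is the specifier,
    V is the head, and O is V's complement, all in one phrase.
    """
    # Bar-level: the head V and its complement O
    bar = ["V", "O"] if head_initial else ["O", "V"]
    # Phrase level: the specifier S sits to the left or right of the bar-level
    return "".join(["S"] + bar if spec_initial else bar + ["S"])

# Try every combination of the two parameters.
orders = {linearize(s, h) for s, h in product([True, False], repeat=2)}
print(sorted(orders))
print("VSO" in orders, "OSV" in orders)
```

Under this simplification the specifier is always outside the bar-level, so S can never appear between V and O. That is why VSO and OSV never come out, whatever the settings.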

Nonetheless, K’iche’ (a Mayan language spoken in Guatemala) has a basic word order of “Verb-Subject-Object,” or VSO.

OSV is incredibly rare. This points to a very interesting distributional fact: while all possible orders exist, they don’t all exist with the same frequency. SOV is the predominant word order cross-linguistically, accounting for about 40% of the world’s languages. Next is SVO, accounting for about another 35%. The other categories account for the remaining 25%.

Interestingly, languages tend to be largely consistent in their head and specifier settings. That is, English is generally head-initial and specifier-initial. Kashaya is generally head-final and specifier-initial. Malagasy and Hixkaryana are similarly consistent in their own settings.

It was proposed that the theory of syntax, coupled with a universal set of parameters can account for the entirety of the world’s languages. That is, suppose that there is a finite number of “switches,” and each language has a unique cumulative setting for each switch. This was (and still is) a popular idea. Even if it’s not entirely correct, it’s still a very handy way to think about language variation.

Parametric Variation (length: 2m 39s) Credit: Sydney Pritchard

Recap and a look forward

This ends the first part of this book. We’ve built a theory of syntax: X-bar theory. We did so by looking at data, and thinking about what that data tells us. In the end, we decided that the X-bar schema was an efficient way to capture observed patterns in syntactic structures. It is worth reflecting again on what the benefit of the X-bar schema is. It is no longer necessary for us (as researchers) to try to figure out the “VP” rule, or the “NP” rule, because in fact, they’re the same rule! It’s just the X-bar tree.

Even more, consider the task of language acquisition. Every baby is confronted with a huge challenge: acquiring the intricacies of language. That challenge is greatly simplified if the baby intrinsically knows that everything they’re hearing conforms to X-bar syntax. The baby doesn’t have to learn about all the different things that can go in VP, nor all the different things that can go in NP. For the most part, the baby doesn’t have to figure out the constituency of the words they are hearing, because the constituency is essentially dictated by the X-bar schema. This greatly simplifies what a baby needs to learn to acquire a language, any language. All they need to figure out (besides the words) are the parameters. Is the language head-initial or head-final? Is the language specifier-initial or specifier-final?

From here on out, we’re going to stick with the X-bar schema; we won’t make any more changes to the structure that we use. Going forward, we’re going to start talking about manipulating structure. What happens when things start to move around?

Key Takeaways

- Intermediate levels are found across all phrases.

- The X-bar schema is empirically motivated by looking at constituency.

- The X-bar schema defines four positions: head, complement, specifier, adjunct.

- Complements and adjuncts can be empirically distinguished through three tests.

Advanced

The X-bar schema only permits maximally binary branching trees. That is, according to the X-bar schema, a node can have at most two daughters. It can have fewer than two daughters, i.e., one or no daughters. But it cannot have three daughters.

In light of this constraint, we should revisit our phrase structure rules for coordination, which seem to require a node to have three daughters. Recall the rules:

- XP → XP conj XP

- X’ → X’ conj X’

- X → X conj X
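The conflict between these rules and binary branching can be checked mechanically. The sketch below is an illustration only: it assumes trees are written as nested tuples of the form (label, daughter, daughter, ...), with plain strings standing in for words, and it simplifies the internal structure of each conjunct. The function name `is_binary_branching` is hypothetical.

```python
def is_binary_branching(tree):
    """True if no node in the tree has more than two daughters.

    A tree is a tuple (label, daughter, daughter, ...); a plain string is a word.
    """
    if isinstance(tree, str):
        return True
    label, *daughters = tree
    return len(daughters) <= 2 and all(is_binary_branching(d) for d in daughters)

# Flat structure produced by the rule XP -> XP conj XP (three daughters).
# The conjuncts are simplified to bare DPs for this illustration.
flat_coordination = (
    "DP",
    ("DP", "apples"),
    ("Conj", "and"),
    ("DP", "bananas"),
)

print(is_binary_branching(flat_coordination))   # False
```

The top node has three daughters, so the flat structure fails the check, which is why the coordination rules need to be rethought under the X-bar schema.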

It’s not immediately clear how to map these rules into X’-syntax. Is there any way to demonstrate constituency that is consistent with binary branching structures? It turns out that there are a few tests that suggest an “asymmetric” constituent structure. Consider the following minimal pair, which involves (apparent) rightward movement of part of a coordinated element.

![]()

These data show that the conjunction “and” groups together with the second conjunct, not the first.

Additional data comes from what is called Left-Branch Extraction. The basic observation is that sometimes you can move (something inside of) the leftmost part of a coordinate, but nothing in the rightmost part. An illustrative example comes from Japanese.[3] (The underlines indicate where the first word has moved from.)

![Rendered by QuickLaTeX.com \setcounter{ExNo}{30} \ex. \ag. ringo-o Taro-wa [{\underline{\hspace{10pt}}} san ko] to [banana-o ni hon] tabeta\\ apple-\textsc{acc} Taro-\textsc{top} {} three \textsc{cl} and banana-\textsc{acc} two \textsc{cl} ate\\ \trans `Taro ate three apples and two bananas.' \bg. *banana-o Taro-wa [ringo-o san ko] to [{\underline{\hspace{10pt}}} ni hon] tabeta\\ apple-\textsc{acc} Taro-\textsc{top} apple-\textsc{acc} three \textsc{cl} and {} two \textsc{cl} ate\\ \trans `Taro ate three apples and two bananas.'](https://opentext.ku.edu/app/uploads/quicklatex/quicklatex.com-67e9ad45b8d50d03ac0daa0efcca9334_l3.png)

While an analysis of Left-Branch Extraction is beyond the scope of this class, what we should probably conclude is that in coordination, the head of the coordination (i.e., “and”) forms a constituent with the second element, to the exclusion of the first. It’s therefore possible to translate our phrase structure rules for coordination above into the following X’-compliant tree.

This tree treats the first coordinate as a specifier, and the second as a complement. And crucially, it groups the head of ConjP together with the second coordinate. Thus, there is motivation for making even coordinated phrases abide by X’-structure.
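The grouping described here can be spelled out in a short sketch. It assumes trees are nested tuples of the form (label, daughter, ...), uses simplified bare DPs as conjuncts, and treats “constituent” as “the words dominated by some node.” The helper names `words` and `constituents` are hypothetical, introduced only for this illustration.

```python
def words(tree):
    """Return the words a node dominates, left to right."""
    if isinstance(tree, str):
        return [tree]
    return [w for daughter in tree[1:] for w in words(daughter)]

def constituents(tree):
    """Collect the word string of every node in the tree (every constituent)."""
    if isinstance(tree, str):
        return set()
    found = {" ".join(words(tree))}
    for daughter in tree[1:]:
        found |= constituents(daughter)
    return found

# ConjP with the first conjunct as specifier and the second as complement.
conj_p = (
    "ConjP",
    ("DP", "apples"),                       # specifier
    ("Conj'",
        ("Conj", "and"),                    # head
        ("DP", "bananas")),                 # complement
)

found = constituents(conj_p)
print("and bananas" in found)    # True: head + second conjunct form Conj'
print("apples and" in found)     # False: no node dominates exactly these words
```

Under this structure every node has at most two daughters, and the conjunction forms a constituent with the second conjunct but not with the first.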

[1] Note that the term "adverb" is a bit misleading: these aren't "adding" to the "verb".

[2] FYI: "complement" is spelled with an 'e'. It is not "compliment".

[3] Bošković, Željko. "On the coordinate structure constraint, across-the-board-movement, phases, and labeling." Recent developments in phase theory (2020): 133-182.

A transitive verb has two arguments, a subject and an object.

Intransitive verbs only ever appear with a subject, and never appear with an object. In theoretical terms, an intransitive verb is a verb that c-selects for only one argument.

Something is recursive if it is a process that applies to itself. Practically, in syntax, something is recursive if it is a category that contains the same category (e.g., PP inside of PP, N inside of N, etc.).

The DP-hypothesis proposes that all NPs are encased in a DP "shell."

A terminal node is a node that ends in a lexical item, i.e., a word or a morpheme. Phrases and bar-levels are not terminal.

X is the daughter of Y if X is directly under Y (is immediately dominated by Y). This relationship can also be stated as "Y is the mother of X."

X and Y are sisters if X and Y share the same mother, that is, if they're both directly under the same thing (immediately dominated by the same phrase).
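The mother, daughter, and sister relations defined above can be sketched in a few lines of Python. This is an illustration only: trees are assumed to be nested tuples of the form (label, daughter, ...), the object DP is simplified to a single node, and the function names `mother_of` and `are_sisters` are hypothetical.

```python
def mother_of(tree, target):
    """Return the node that immediately dominates `target`, or None."""
    if isinstance(tree, str):
        return None
    for daughter in tree[1:]:
        if daughter is target:
            return tree
        found = mother_of(daughter, target)
        if found is not None:
            return found
    return None

def are_sisters(tree, x, y):
    """X and Y are sisters if they are distinct nodes with the same mother."""
    mother = mother_of(tree, x)
    return x is not y and mother is not None and mother is mother_of(tree, y)

verb = ("V", "sing")
obj = ("DP", "a song")          # internal structure of the DP omitted
v_bar = ("V'", verb, obj)
vp = ("VP", v_bar)

print(mother_of(vp, verb) is v_bar)   # True: V' immediately dominates V
print(are_sisters(vp, verb, obj))     # True: V and DP share the mother V'
print(are_sisters(vp, verb, v_bar))   # False: V' is the mother of V, not its sister
```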